The annoyance we experience when trying to view a website that doesn’t load is familiar to everyone. The latency that drives us crazy is often due to increased traffic that isn’t distributed efficiently, leading to overloaded servers and crashing websites. The reliability and performance of web applications are crucial today, and seconds of latency can mean lost customers. That’s why load balancing plays such an important role.

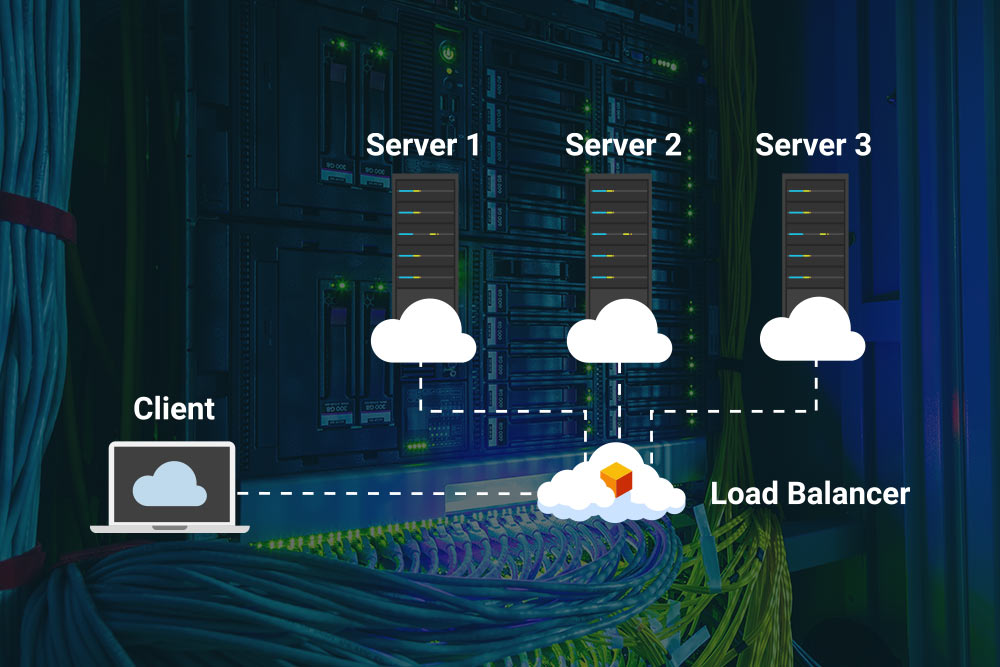

What does load balancing do? In a nutshell, it distributes incoming network traffic across a pool of servers, preventing downtime and bottlenecks and improving customer experience. In this article, we will unpack load balancing, explain how it works, and show how your IT infrastructure can benefit from it. Let’s begin.

What is Load Balancing?

Load balancing is the method of distributing workloads, or incoming network traffic, across the available servers to handle traffic fluctuations and improve availability and reliability. By spreading requests out, load balancing reduces the strain on individual servers and cuts the latency users experience when an overloaded server can’t keep up with requests.

A single server may cope with a few visitors, but load balancing becomes essential at scale, when high-traffic websites and applications have to process millions of requests daily. It lets customers access sites with minimal delay and improves efficiency and performance, both of which are crucial for well-functioning applications and a positive customer experience.

What Are the Benefits of Load Balancing?

Load balancing has several significant benefits for systems and networks.

A Boost to Performance and Responsiveness

Load balancing distributes traffic efficiently across multiple servers, ensuring that no single server is overwhelmed. This leads to better utilization of resources and improved overall performance. Reducing bottlenecks and latency makes applications more responsive, which is especially important for high-traffic services.

Availability and Reliability

Load balancing can prevent costly downtime by continuously monitoring server health (through regular health checks) and diverting traffic away from failing or unresponsive servers. Distributing traffic across a pool of servers also provides redundancy, reducing the risk of a single point of failure.
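To make the failover idea concrete, here is a minimal Python sketch, assuming a simple round-robin pool whose health status is updated by some external check. The class and server names (`HealthCheckedPool`, `app-1`, and so on) are hypothetical; a real load balancer would run the health checks itself, typically by probing each server periodically.

```python
import itertools


class HealthCheckedPool:
    """Round-robin pool that skips servers marked unhealthy (illustrative only)."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._cycle = itertools.cycle(self.servers)

    def record_health_check(self, server, ok):
        # In practice this would be driven by periodic probes;
        # here the result is set by hand.
        if ok:
            self.healthy.add(server)
        else:
            self.healthy.discard(server)

    def pick(self):
        # Look at each server at most once per call and skip unhealthy ones.
        for _ in range(len(self.servers)):
            server = next(self._cycle)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")


pool = HealthCheckedPool(["app-1", "app-2", "app-3"])
pool.record_health_check("app-2", ok=False)
print([pool.pick() for _ in range(4)])  # ['app-1', 'app-3', 'app-1', 'app-3']
```

As soon as `app-2` is marked unhealthy it stops receiving requests, and the remaining servers absorb its share; marking it healthy again returns it to rotation.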

Easy Scalability

Load balancing enables easy horizontal scaling: additional servers can be added to the server pool to handle increased traffic, which makes it easier to scale applications as demand grows. In cloud environments, load balancers are often paired with autoscaling, so servers can be added or removed dynamically based on demand.
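Because the load balancer spreads traffic roughly evenly, sizing the pool becomes simple arithmetic. The sketch below assumes every server can sustain the same number of requests per second and that you want some spare headroom; the function name and the traffic figures in the example are hypothetical.

```python
import math


def servers_needed(peak_rps: float, capacity_per_server: float, headroom: float = 0.0) -> int:
    """Estimate pool size, assuming traffic is spread evenly across identical servers.

    headroom is spare capacity as a fraction (0.25 means 25% extra).
    """
    required_rps = peak_rps * (1 + headroom)
    return math.ceil(required_rps / capacity_per_server)


# Hypothetical figures: each server sustains 500 requests/second.
print(servers_needed(4_000, 500))                 # 8
print(servers_needed(4_000, 500, headroom=0.25))  # 10
print(servers_needed(8_000, 500, headroom=0.25))  # 20: traffic doubled, so add servers
```

Scaling horizontally then means adding servers to the pool as this number grows, rather than replacing existing servers with bigger ones.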

Cost-Efficiency

By ensuring that all servers are used efficiently, load balancing can reduce the need for overprovisioning, a common source of unnecessary costs.

Easy Maintenance and Management

Load balancing allows servers to be taken offline for maintenance without affecting availability, as traffic can be rerouted to other servers. It also provides a central point of control for managing traffic and monitoring server health, which simplifies the management of the infrastructure. Managed solutions can further simplify management for your team.
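Taking a server offline gracefully is usually called draining: the load balancer stops sending it new requests and waits for its existing connections to finish. The Python sketch below models that idea with a simple connection counter; the class, its methods, and the server names are hypothetical and not tied to any particular product.

```python
class DrainablePool:
    """Pool that can take a server out of rotation for maintenance (illustrative only)."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.draining = set()
        self.active = {s: 0 for s in self.servers}  # open connections per server
        self._i = 0

    def pick(self):
        # Only servers that are not draining receive new requests.
        candidates = [s for s in self.servers if s not in self.draining]
        if not candidates:
            raise RuntimeError("no servers in rotation")
        server = candidates[self._i % len(candidates)]
        self._i += 1
        self.active[server] += 1
        return server

    def release(self, server):
        # Called when a connection to `server` closes.
        self.active[server] -= 1

    def drain(self, server):
        self.draining.add(server)

    def safe_to_stop(self, server):
        # Safe once the server is draining and has no open connections left.
        return server in self.draining and self.active[server] == 0

    def restore(self, server):
        self.draining.discard(server)


pool = DrainablePool(["app-1", "app-2"])
first = pool.pick()                # 'app-1', now holding one open connection
pool.drain("app-1")                # stop new traffic to app-1
print(pool.pick(), pool.pick())    # app-2 app-2
print(pool.safe_to_stop("app-1"))  # False: a connection is still open
pool.release(first)
print(pool.safe_to_stop("app-1"))  # True: safe to take offline for maintenance
```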

Elevated User Experience

Balancing the load enables faster response times and consistent performance, even during peak traffic. Load balancers can also keep a user’s traffic directed to the same server (known as session persistence, or “sticky sessions”), which provides a seamless experience for applications that keep session state on a server. Together, these improve user experience and customer satisfaction.
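One common way to implement session persistence is with a cookie: the first response tells the client which server it was assigned, and later requests carrying that cookie are sent back to the same server. The following Python sketch assumes a hypothetical cookie name (`lb_server`) and represents cookies as a plain dictionary rather than real HTTP headers.

```python
import itertools


class StickyBalancer:
    """Cookie-based session persistence on top of round-robin (illustrative only)."""

    COOKIE = "lb_server"  # hypothetical cookie name

    def __init__(self, servers):
        self.servers = list(servers)
        self._rr = itertools.cycle(self.servers)

    def route(self, cookies):
        """Return (server, cookies_to_set) for one request."""
        pinned = cookies.get(self.COOKIE)
        if pinned in self.servers:
            # Returning client: keep it on the server that holds its session.
            return pinned, {}
        # New client (or unknown server in the cookie): pick one and pin it.
        server = next(self._rr)
        return server, {self.COOKIE: server}


lb = StickyBalancer(["app-1", "app-2", "app-3"])
server, set_cookie = lb.route({})  # new visitor
print(server, set_cookie)          # app-1 {'lb_server': 'app-1'}
print(lb.route(set_cookie))        # ('app-1', {}): same server again
print(lb.route({})[0])             # app-2: the next new visitor
```

The trade-off is that pinned clients cannot be moved freely between servers, so stickiness can make the load somewhat less even.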

How Does Load Balancing Work?

To better understand how load balancing works, let’s look at what happens without it.

Many everyday situations resemble how load balancing works. Take a busy restaurant with many tables (customers/requests) and waiters (servers). Without a restaurant manager (load balancer), the following situation could arise: customers suddenly flood the restaurant, but only one waiter is available because the others are in the back, taking a break. The lone waiter tries to take every order and serve all the food, but meeting all that demand is impossible. Exhausted, the waiter falls behind, and customers end up unhappy and complaining.

The situation is very different when a manager (load balancer) is there to supervise. When the manager sees that there are too many tables for one waiter to handle, he instructs the other waiters to start taking orders. The manager divides the requests among the waiters to ensure continuous service without delay and to avoid putting an unnecessarily high load on any one waiter.

In the same way, load balancing in a network distributes incoming traffic, preventing any single server from becoming overloaded and possibly failing. The load balancer decides which server receives each incoming request using a load balancing algorithm.

Static and Dynamic Algorithms

The algorithms a load balancer uses to assign each incoming request to a server fall into two broad categories: static and dynamic.

- Static algorithms distribute workloads without considering the current state of the system. A static algorithm doesn’t know how the servers are performing or which of them are overloaded. This matters because requests don’t all create the same amount of work: users don’t spend the same amount of time on a given page, and while some stay for a few seconds, others stay ten minutes or more. Round-robin, the simplest static algorithm, isn’t aware of these differences and keeps handing out requests to servers in a fixed sequential order; a common implementation is DNS (Domain Name System) round-robin, which rotates through a list of server addresses. This makes round-robin easy to configure, but it can leave some servers overloaded while others sit idle.

Another static algorithm is IP hash, which computes a hash of the client’s IP address (some variants also use other request information, such as HTTP headers) to choose a server, so the same client is consistently routed to the same server. This benefits applications such as e-commerce sites, where a shopper’s session should stay on one server. Weighted round-robin assigns a weight to each server (for example, in DNS or load balancer settings), so servers that can handle more traffic receive a proportionally larger share of it.

- Dynamic algorithms, on the other hand, make real-time decisions based on the current state of the system. They adjust the distribution of traffic based on factors like server load, response time, availability, and network conditions, diverting traffic from overburdened servers to ones with spare capacity. Dynamic algorithms include the least-connections approach, which sends each new request to the server with the fewest active connections. Other dynamic approaches include weighted least-connections (which accounts for some servers being able to handle more connections than others), weighted response time (which favors the servers that have been responding fastest), and resource-based algorithms (which route traffic according to each server’s available resources, such as CPU and memory).
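The three static algorithms described above can be sketched in a few lines of Python. This is an illustration under simplifying assumptions, not how any specific product implements them: the weighted version repeats each server `weight` times in a row (production balancers usually interleave the picks more smoothly), and the IP hash simply takes the hash modulo the number of servers, so changing the server list reshuffles most clients. Function and server names are hypothetical.

```python
import hashlib
import itertools


def round_robin(servers):
    """Hand out servers in a fixed, repeating order."""
    return itertools.cycle(servers)


def weighted_round_robin(weights):
    """Give each server `weight` turns per cycle, e.g. {"big": 3, "small": 1}."""
    sequence = [server for server, weight in weights.items() for _ in range(weight)]
    return itertools.cycle(sequence)


def ip_hash(client_ip, servers):
    """Map a client IP to a server; the same IP gets the same server
    as long as the server list does not change."""
    # hashlib gives a hash that is stable across processes, unlike Python's
    # built-in hash(), which is randomized per process for strings.
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


rr = round_robin(["app-1", "app-2", "app-3"])
print([next(rr) for _ in range(4)])   # ['app-1', 'app-2', 'app-3', 'app-1']

wrr = weighted_round_robin({"big": 3, "small": 1})
print([next(wrr) for _ in range(4)])  # ['big', 'big', 'big', 'small']

# Always the same server for this IP while the list stays unchanged.
print(ip_hash("203.0.113.7", ["app-1", "app-2", "app-3"]))
```

None of these functions looks at how busy a server is, which is exactly what makes them static.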

Because they track more variables, dynamic algorithms are more difficult and time-consuming to configure than static ones, but they adapt better to uneven or changing workloads.
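The following Python sketch shows how least-connections, weighted least-connections, and a simplified response-time rule pick a server from live metrics. The `Server` fields and the numbers are hypothetical, and the response-time rule here just picks the lowest recent average rather than any particular vendor's weighted formula; a real load balancer would also update these values continuously, which this sketch does not model.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    active_connections: int = 0
    weight: int = 1               # relative capacity, used by the weighted variant
    avg_response_ms: float = 0.0  # recent average response time


def least_connections(servers):
    """Pick the server with the fewest open connections (first one wins ties)."""
    return min(servers, key=lambda s: s.active_connections)


def weighted_least_connections(servers):
    """Pick the server with the fewest connections per unit of capacity."""
    return min(servers, key=lambda s: s.active_connections / s.weight)


def fastest_response(servers):
    """Simplified response-time rule: pick the server answering fastest lately."""
    return min(servers, key=lambda s: s.avg_response_ms)


pool = [
    Server("app-1", active_connections=5, weight=1, avg_response_ms=30.0),
    Server("app-2", active_connections=8, weight=4, avg_response_ms=45.0),
    Server("app-3", active_connections=6, weight=1, avg_response_ms=15.0),
]
print(least_connections(pool).name)           # app-1 (5 connections)
print(weighted_least_connections(pool).name)  # app-2 (8 / 4 = 2 per unit of capacity)
print(fastest_response(pool).name)            # app-3 (15 ms)
```

Unlike the static versions, the answer changes as the metrics change: if `app-1` picks up two more connections, least-connections starts favoring `app-3` instead.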

Types of Load Balancers

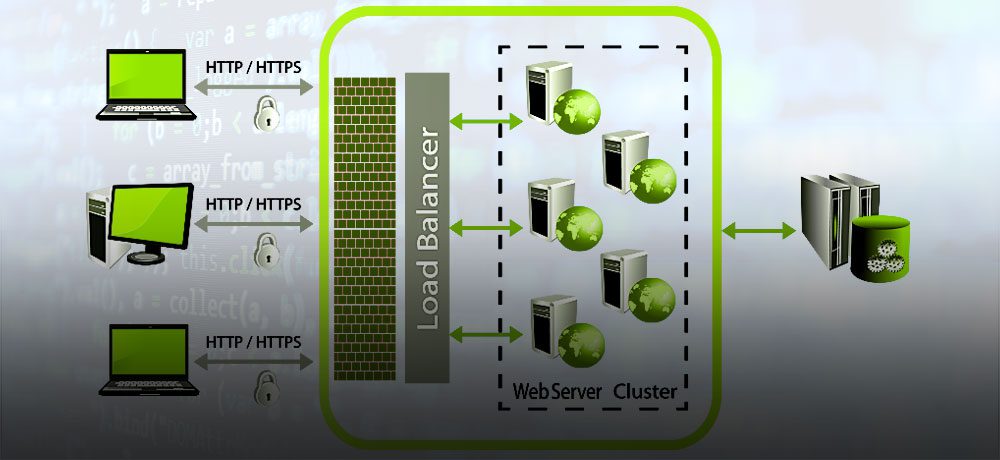

Load balancing is carried out by a load balancer, which can be hardware- or software-based. Hardware-based load balancers are physical devices, while software-based solutions can run on servers, virtual machines, containers, or cloud environments.

- Hardware load balancers are dedicated physical devices with built-in software designed to handle very large volumes of application traffic. Many also support virtualization, allowing several virtual load balancer instances to run on a single device. Hardware load balancers suit high-traffic workloads where performance and reliability are critical, but they can be more expensive and harder to scale than software-based solutions.

- Software-based load balancers run on standard servers or virtual machines, often as part of application delivery controllers (ADCs), to manage and distribute traffic across multiple servers. These often include extras such as caching, traffic shaping, and compression. This approach is flexible, cost-effective, and easily scalable, making it ideal for cloud environments or businesses with varying traffic demands. While software load balancers may not match the raw performance of hardware-based solutions, they are easier to configure, scale, and integrate with existing infrastructure.

Improved Reliability, Superior Performance

To sum it all up, load balancing is crucial to a well-performing IT infrastructure. Today, when every second of latency matters, load balancers ensure that your applications can meet the demands of your customers and offer a seamless experience. Using them can significantly improve your infrastructure’s performance, scalability, and overall reliability.

If you’re interested in a reliable, managed solution, check out our load balancing solutions at Volico Data Centers. Our engineers can help you choose the best option based on your business’s specific needs. Contact us today.